Dongkwan Kim

AI Agents for Bug Discovery & Exploitation

LLM Dev Infra

Postdoc at Georgia Tech

BCDA: The AI Detective Separating Real Bugs from False Alarms

🎯 From Potential Sink to Actionable Intelligence

The core mission of BCDA (Bug Candidate Detection Agent) is to address the fundamental challenge of lightweight sink analysis: distinguishing real vulnerabilities from false-positive noise. When MCGA, our cartographer, flags a function containing a potentially vulnerable “sink” (such as a function that executes system commands), BCDA takes over. Its job isn’t just to say “yes” or “no.” BCDA performs a deep, multi-stage, LLM-powered investigation to produce a Bug Inducing Thing (BIT). A BIT is a high-fidelity, structured report on a confirmed vulnerability candidate. It records the exact location, the specific trigger conditions (such as the if-else branches that must be taken), and an analysis generated by LLMs. This report then serves as a guide for our demolition expert, BGA, and for the fuzzing stages.

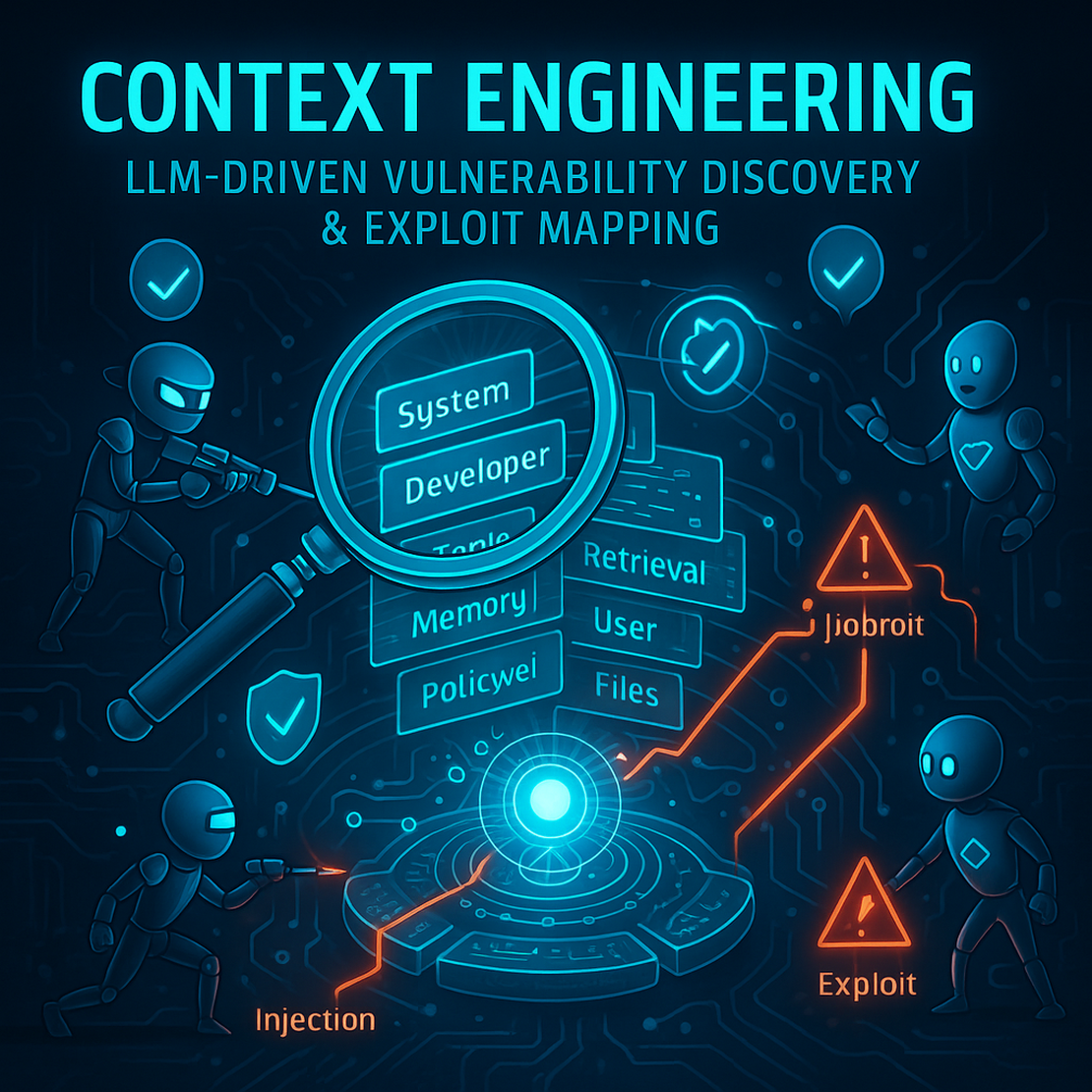
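To make the idea of a BIT more concrete, here is a minimal Java sketch of what such a structured report might hold. The type names `BugInducingThing` and `TriggerCondition`, their fields, and the `checklist()` helper are all hypothetical illustrations built from the description above (location, trigger conditions, LLM analysis); they are not BCDA's actual schema.

```java
import java.util.List;

public class BitSketch {
    // Hypothetical: one branch condition on the path to the sink.
    // mustBeTrue = true means the branch must be taken, false means it must be avoided.
    record TriggerCondition(String file, int line, String condition, boolean mustBeTrue) {}

    // Hypothetical shape of a BIT: where the sink is, how to reach it, why it looks exploitable.
    record BugInducingThing(
            String sinkFunction,                // function containing the flagged sink
            String file,
            int line,                           // exact location of the sink call
            List<TriggerCondition> conditions,  // branches that must be satisfied to reach it
            String llmAnalysis) {               // free-text reasoning from the LLM stages

        BugInducingThing {
            conditions = List.copyOf(conditions); // immutable defensive copy
        }

        // Renders the trigger conditions as a checklist a downstream stage could consume.
        String checklist() {
            StringBuilder sb = new StringBuilder();
            for (TriggerCondition c : conditions) {
                sb.append(c.mustBeTrue() ? "[take]  " : "[avoid] ")
                  .append(c.file()).append(':').append(c.line())
                  .append("  ").append(c.condition()).append('\n');
            }
            return sb.toString();
        }
    }

    public static void main(String[] args) {
        BugInducingThing bit = new BugInducingThing(
                "runCommand", "Handler.java", 42,
                List.of(new TriggerCondition("Handler.java", 30, "cmd != null", true),
                        new TriggerCondition("Handler.java", 35, "cmd.startsWith(\"safe:\")", false)),
                "User-controlled cmd reaches the command sink when it lacks the safe: prefix.");
        System.out.print(bit.checklist());
    }
}
```

Keeping the conditions as typed entries rather than burying them in free text is one way a later stage could check them off one at a time; the LLM's narrative reasoning still travels alongside in `llmAnalysis`.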

Context Engineering: How BGA Teaches LLMs to Write Exploits

The Problem with Teaching AI to Hack

Teaching an LLM to write working exploits is harder than most AI tasks. In many applications “close enough” works, but vulnerability exploitation demands precise execution: a single wrong character can make an entire exploit fail. Take this seemingly simple Java reflective call injection vulnerability:

```java
String className = request.getParameter("class"); // attacker-controlled input
Class.forName(className); // BUG: arbitrary class loading
```

This looks straightforward, but there’s a catch: to exploit this vulnerability, the LLM must load the exact class name "jaz.Zer" to trigger Jazzer’s detection. Not "jaz.Zero", not "java.Zer", not "jaz.zer". One character wrong and the entire exploit fails.
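The brittleness described above can be illustrated with a toy simulation. The `detectorFires` method is a hypothetical stand-in that only mimics the exact, case-sensitive match on "jaz.Zer"; it is not Jazzer's actual sanitizer code. It checks the near-miss class names from the text against the single target.

```java
import java.util.List;

public class ExactTargetDemo {
    // The one class name that, per the text above, makes Jazzer report the bug.
    static final String TARGET = "jaz.Zer";

    // Hypothetical stand-in for the detector: exact, case-sensitive string match only.
    static boolean detectorFires(String loadedClassName) {
        return TARGET.equals(loadedClassName);
    }

    public static void main(String[] args) {
        List<String> candidates = List.of("jaz.Zer", "jaz.Zero", "java.Zer", "jaz.zer");
        for (String name : candidates) {
            System.out.printf("%-9s -> %s%n", name, detectorFires(name) ? "detected" : "missed");
        }
    }
}
```

Only the first candidate is reported; every near miss is scored exactly like a completely wrong answer, which is why “close enough” gives an LLM no partial credit here.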

BGA: Self-Evolving Exploits Through Multi-Agent AI

🔄 Where BGA Fits in the MLLA Pipeline

Before we dive into BGA’s self-evolving exploits, here’s how it fits into the broader MLLA vulnerability discovery pipeline:

Discovery Agents (CPUA, MCGA, CGPA) → Detective (BCDA) → Exploit Generation (BGA)

- Discovery agents map the codebase and identify potential vulnerability paths.
- BCDA investigates these paths, filters out false positives, and creates Bug Inducing Things (BITs) with precise trigger conditions.
- BGA receives these confirmed vulnerabilities and generates self-evolving exploits to trigger them.

Now BGA takes the stage, armed with BCDA’s detailed intelligence about exactly which conditions must be satisfied to reach each vulnerability.
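The three-stage flow above can be sketched as a chain of functions: discovery yields candidate paths, the detective stage filters them into BITs, and exploit generation consumes only the surviving BITs. All names here (`CandidatePath`, `Bit`, `detect`, `generateExploit`) are hypothetical, and BCDA's LLM-driven investigation is replaced by a precomputed boolean flag purely so the sketch runs.

```java
import java.util.List;
import java.util.Optional;

public class PipelineSketch {
    // Hypothetical output of the discovery agents: a path to a suspicious sink.
    record CandidatePath(String sink, List<String> branches, boolean reallyReachable) {}

    // Hypothetical output of BCDA: a confirmed candidate plus the conditions to reach it.
    record Bit(String sink, List<String> triggerConditions) {}

    // Stand-in for BCDA. In the real system an LLM investigation makes this call;
    // here a precomputed flag marks false positives so the sketch is runnable.
    static Optional<Bit> detect(CandidatePath p) {
        return p.reallyReachable()
                ? Optional.of(new Bit(p.sink(), p.branches()))
                : Optional.empty();
    }

    // Stand-in for BGA: returns a description of the exploit task, not a real exploit.
    static String generateExploit(Bit bit) {
        return "exploit for " + bit.sink() + " satisfying " + bit.triggerConditions();
    }

    static List<String> run(List<CandidatePath> discovered) {
        return discovered.stream()
                .map(PipelineSketch::detect)
                .flatMap(Optional::stream)             // false positives never reach BGA
                .map(PipelineSketch::generateExploit)
                .toList();
    }

    public static void main(String[] args) {
        List<CandidatePath> found = List.of(
                new CandidatePath("Runtime.exec", List.of("cmd != null"), true),
                new CandidatePath("Class.forName", List.of("debug == true"), false));
        run(found).forEach(System.out::println);
    }
}
```

The design point the sketch mirrors is that the expensive exploit-generation stage only ever sees inputs that survived the detective's filter, together with the trigger conditions BCDA attached.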

MLLA: Teaching LLMs to Hunt Bugs Like Security Researchers

When Fuzzing Meets Intelligence

Picture this: you’re a security researcher staring at 20 million lines of code, hunting for vulnerabilities that could compromise everything from your smartphone to critical infrastructure. Traditional fuzzers approach this challenge with brute force, throwing millions of random inputs at the program like a toddler mashing keyboard keys. Sometimes it works. Often, it doesn’t. But what if we could change the game entirely? Meet MLLA (Multi-Language LLM Agent), the most ambitious experiment in AI-assisted vulnerability discovery we’ve ever built. Instead of random chaos, MLLA thinks, plans, and hunts bugs like an experienced security researcher, but at machine scale.